Websites that load quickly keep their users happier and also do better in SEO (Search Engine Optimization). Search engines like Google and Bing aim to rank websites that offer not only great content but also a good user experience.

Understanding the best practices for optimizing website speed can make the difference between a great app and a poor one.

Today I will share how to optimize a website for speed and get better scores in WebPageTest, Google PageSpeed Insights, Pingdom, and GTmetrix, some of the most popular tools for testing page load speed.

1. Enable GZip Compression

GZip is a data compression format: it takes a chunk of data and makes it smaller. The original data can be restored by un-zipping the compressed file.
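To see the round trip in practice, here is a minimal sketch using Python's standard `gzip` module. Python is simply my choice for the examples in this post, not something these techniques require, and the sample markup is made up. It compresses a repetitive HTML snippet and checks that decompressing gives back exactly the same bytes.

```python
import gzip

# Repetitive markup, similar to a list of products on a real page (made-up sample).
page = ("<li class='item'>Product</li>\n" * 200).encode("utf-8")

compressed = gzip.compress(page)        # make the data smaller
restored = gzip.decompress(compressed)  # un-zip it again

print(f"original:   {len(page)} bytes")
print(f"compressed: {len(compressed)} bytes")
print("restored matches original:", restored == page)
```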

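HTTP supports gzip natively: the browser announces that it accepts gzip with the `Accept-Encoding` request header, and the server can send a gzipped body marked with `Content-Encoding: gzip`. That makes it directly relevant to websites and web apps.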

When it is in use, your bandwidth costs for serving the website are lower, because visitors download smaller files.
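On the wire this is a negotiation: the server only gzips the body when the browser listed gzip in `Accept-Encoding`, and it labels the result so the browser knows to un-zip it. The sketch below models that decision in Python. `accepts_gzip` and `encode_response` are hypothetical helpers, and for brevity the sketch ignores the `*` wildcard.

```python
import gzip


def accepts_gzip(accept_encoding: str) -> bool:
    """True if an Accept-Encoding header lists gzip with a non-zero q-value."""
    for token in accept_encoding.split(","):
        name, _, params = token.partition(";")
        if name.strip().lower() != "gzip":
            continue
        q = 1.0
        params = params.strip().lower()
        if params.startswith("q="):
            try:
                q = float(params[2:])
            except ValueError:
                q = 0.0  # malformed weight: treat gzip as not acceptable
        return q > 0
    return False


def encode_response(body: bytes, accept_encoding: str) -> tuple[dict[str, str], bytes]:
    """Return (headers, body), gzipping the body only when the client accepts gzip."""
    headers = {"Vary": "Accept-Encoding"}  # tells caches the response depends on this header
    if accepts_gzip(accept_encoding):
        body = gzip.compress(body)
        headers["Content-Encoding"] = "gzip"
    headers["Content-Length"] = str(len(body))
    return headers, body


headers, payload = encode_response(b"<p>Hello</p>" * 50, "gzip, deflate, br")
print(headers["Content-Encoding"], headers["Content-Length"])
```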

There are a couple of caveats to using gzip, but in general it is better to use it than not. For example, zipping and unzipping files takes some time and processor power, but this is normally not an issue, because that time is usually less than the time saved by downloading a smaller file. The net effect is a time saving, even though the browser has to unzip the file.

Some files compress much better than others, so the bandwidth saving varies. Text files like HTML, CSS, and JavaScript give the best results. Images shrink very little with gzip, because formats like JPEG and PNG already have compression built in. Some files (for example, already heavily compressed files like .zip archives) can even get slightly larger when gzipped: they can't be compressed any further, but gzip still adds its own header and metadata.
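A quick experiment makes the difference visible. This Python sketch gzips repetitive HTML and then gzips random bytes, assuming random data is a fair stand-in for already-compressed content such as a .zip file (neither has redundancy left to remove).

```python
import gzip
import random

# Repetitive markup, like a real HTML page.
markup = ("<p>Hello, world!</p>\n" * 500).encode("utf-8")

# Seeded random bytes as a stand-in for already-compressed content.
rng = random.Random(42)
already_compressed = bytes(rng.getrandbits(8) for _ in range(len(markup)))

for label, data in [("HTML text", markup), ("already-compressed data", already_compressed)]:
    out = gzip.compress(data)
    print(f"{label}: {len(data)} bytes -> {len(out)} bytes")
```

The HTML shrinks to a small fraction of its size, while the random data comes out slightly larger than it went in, because gzip's header and trailer are added on top of data it cannot shrink.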

To enable Gzip compression, we can turn it on in our web server software, such as Apache or Nginx.

For example, if you want to enable Gzip compression on Nginx, you can configure your nginx.conf by following this guide for enabling compression on Nginx.

If you develop a web application with Express.js, you can enable it with the compression middleware.


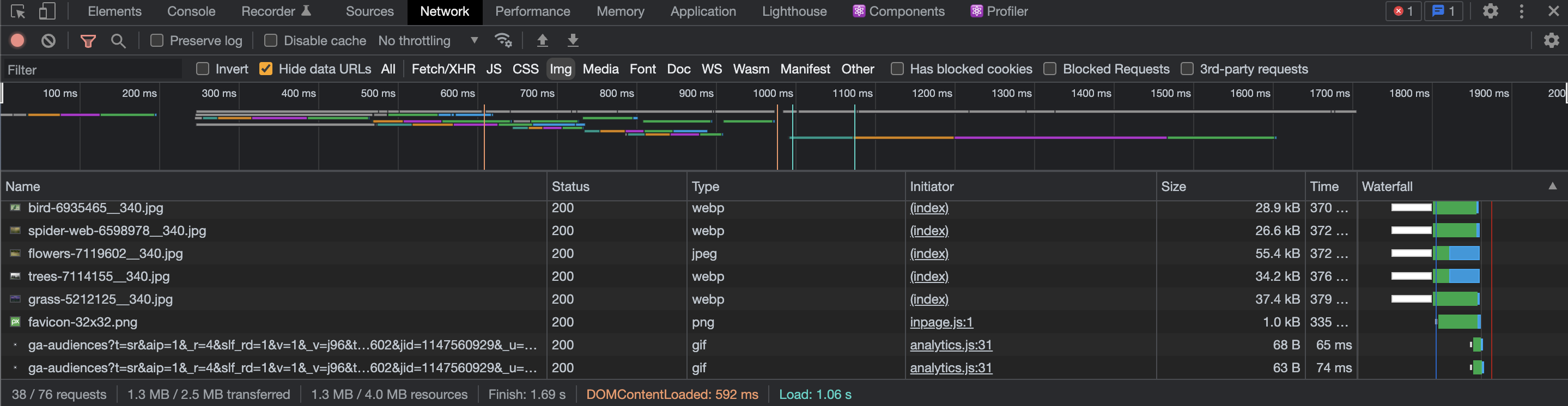
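Express's compression middleware is a JavaScript package, so to keep the examples in one language, here is a rough Python equivalent written as WSGI middleware. It is not Express's API: `gzip_middleware`, `COMPRESSIBLE_TYPES`, and the `min_size` threshold are hypothetical names chosen for this sketch, and its `Accept-Encoding` check is simplified (it ignores q-values).

```python
import gzip

# Content types worth compressing (hypothetical list); JPEG, PNG, ZIP etc. are left alone.
COMPRESSIBLE_TYPES = ("text/", "application/json", "application/javascript", "image/svg+xml")


def gzip_middleware(app, min_size=256):
    """Wrap a WSGI app so compressible responses are gzipped for clients that accept gzip."""

    def wrapped(environ, start_response):
        captured = {}
        chunks = []

        def capture_start_response(status, headers, exc_info=None):
            captured["status"] = status
            captured["headers"] = list(headers)
            return chunks.append  # data from the legacy write() callable is buffered too

        result = app(environ, capture_start_response)
        try:
            for chunk in result:  # buffer the whole response so it can be compressed
                chunks.append(chunk)
        finally:
            if hasattr(result, "close"):
                result.close()
        body = b"".join(chunks)

        headers = [(k, v) for k, v in captured["headers"] if k.lower() != "content-length"]
        lower = {k.lower(): v for k, v in headers}
        compressible = (
            lower.get("content-type", "").startswith(COMPRESSIBLE_TYPES)
            and "content-encoding" not in lower
            and len(body) >= min_size
        )
        if compressible:
            headers.append(("Vary", "Accept-Encoding"))
            # Simplified negotiation: looks for the "gzip" token, ignoring q-values.
            if "gzip" in environ.get("HTTP_ACCEPT_ENCODING", "").lower():
                body = gzip.compress(body)
                headers.append(("Content-Encoding", "gzip"))
        headers.append(("Content-Length", str(len(body))))
        start_response(captured["status"], headers)
        return [body]

    return wrapped


# Usage: a tiny WSGI app returning repetitive HTML, wrapped by the middleware.
def hello_app(environ, start_response):
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [b"<p>Hello, world!</p>\n" * 100]


app = gzip_middleware(hello_app)
```

To try it, you could serve `app` with `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` and compare response sizes with and without an `Accept-Encoding: gzip` request header. Buffering the whole response keeps the sketch short, but it means this version is not suitable for streaming responses.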

2. Use A Modern Image Format Like WebP

Using a modern image format such as WebP can noticeably reduce overall page load time, because WebP files are typically about 25%-34% smaller than the same images in popular formats such as PNG and JPEG.

I usually create each image in two formats: if I use a PNG image somewhere on my web pages, I also convert the same image to WebP. This is easy to do with apps such as Adobe Photoshop or GIMP.

Then I embed the WebP image together with a fallback image. If the browser does not support WebP, it uses the fallback image instead.

Below is an example of how to use a WebP image with a fallback image.

```html
<picture>
  <source srcset="image.webp" type="image/webp">
  <source srcset="image.jpg" type="image/jpeg">
  <img src="image.jpg">
</picture>
```
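The fallback works because the browser goes through the `<source>` elements in order and uses the first one whose `type` it supports, falling back to the `<img>` if none match (and browsers that don't know `<picture>` at all simply render the `<img>`). Here is a simplified Python model of that rule; `pick_picture_source` is a hypothetical helper that ignores `media` attributes and `srcset` descriptors.

```python
def pick_picture_source(sources, fallback_src, supported_types):
    """Simplified model of image selection inside <picture>.

    sources: (srcset, type) pairs in document order.
    The first <source> with a supported type wins; otherwise the <img> src is used.
    """
    for srcset, mime_type in sources:
        if mime_type in supported_types:
            return srcset
    return fallback_src


sources = [("image.webp", "image/webp"), ("image.jpg", "image/jpeg")]
print(pick_picture_source(sources, "image.jpg", {"image/webp", "image/jpeg"}))  # image.webp
print(pick_picture_source(sources, "image.jpg", {"image/jpeg"}))                # image.jpg
```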

3. Properly Size Images

Using appropriate image sizes dramatically reduces total page load time. We can use larger images for the desktop version of a website and smaller images on mobile.

Nowadays, we design and develop websites whose layout adapts to the user's screen size. This technique is called "Responsive Web Design".

But for speed optimization, having a different layout for each screen size isn't enough.

We should show appropriate image sizes depending on the user's screen size as well.

A full-width image slider on a desktop or laptop might look stunning, but showing the same large images on mobile devices is a really bad idea, because they take longer to load and consume the user's mobile data.

To overcome this issue, we need to create multiple sizes of each image and let the browser pick the right one using the `img` element's `srcset` attribute.

```html
<img src="cat.png"
     alt="A picture of a cat"
     srcset="cat-320.png 320w,
             cat-640.png 640w,
             cat-922.png 922w,
             cat-1200.png 1200w">
```

This approach is really easy: the browser loads the most appropriate image size from the candidates we provide.
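Roughly, the browser estimates how many image pixels the slot needs (the slot's CSS width times the device pixel ratio; without a `sizes` attribute the slot is assumed to be the full viewport width) and picks a suitable candidate. The Python sketch below uses one simple heuristic, the smallest image that is wide enough. Real browsers have some leeway (for example, reusing a larger image already in the cache), so treat the hypothetical `choose_srcset_candidate` as an illustration, not the exact algorithm.

```python
def choose_srcset_candidate(candidates, slot_css_px, device_pixel_ratio=1.0):
    """Pick an image from (url, width_descriptor) pairs such as ("cat-320.png", 320).

    Heuristic: the slot needs about slot_css_px * device_pixel_ratio image pixels,
    so take the smallest candidate at least that wide, or the largest if none is.
    """
    needed = slot_css_px * device_pixel_ratio
    ordered = sorted(candidates, key=lambda candidate: candidate[1])
    for url, width in ordered:
        if width >= needed:
            return url
    return ordered[-1][0]


CAT_IMAGES = [("cat-320.png", 320), ("cat-640.png", 640), ("cat-922.png", 922), ("cat-1200.png", 1200)]

# A phone with a 375px-wide viewport and a 2x screen needs about 750 image pixels.
print(choose_srcset_candidate(CAT_IMAGES, 375, 2.0))  # cat-922.png
```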

You can learn more about responsive images here.


4. Reduce the number of requests

Traditional web applications often have many script and style tags on their pages. Some pages link more than 20 CSS, JavaScript, and font files.

It's important to note that browsers don't download all resources all at once.

Each browser limits the number of simultaneous persistent connections per server or proxy (for HTTP/1.1, modern browsers typically allow six per host).

Once it reaches that limit, the browser waits for a current download to finish before starting the next one.

For example, if a page includes 20 JavaScript files and your browser supports 10 simultaneous connections, it has to wait until one or more downloads finish before it can fetch the remaining files. If those files block rendering, the rest of the page stays blank until they have finished downloading.
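To put numbers on that example, here is a small Python model that schedules downloads onto a fixed number of connections. It assumes every file takes the same fixed time no matter how many downloads run in parallel (ignoring bandwidth sharing and latency), so the figures are illustrative only; `total_download_time` is a hypothetical helper.

```python
import heapq


def total_download_time(durations_ms, max_connections):
    """Time until every file has finished when at most max_connections download at once.

    Files start in order; each one takes the earliest connection that becomes free.
    """
    free_at = [0.0] * max_connections  # time at which each connection becomes free
    heapq.heapify(free_at)
    finish = 0.0
    for duration in durations_ms:
        start = heapq.heappop(free_at)  # earliest available connection
        end = start + duration
        heapq.heappush(free_at, end)
        finish = max(finish, end)
    return finish


twenty_scripts = [100] * 20  # 20 files, each assumed to take 100 ms
print(total_download_time(twenty_scripts, 10))  # 200.0
print(total_download_time(twenty_scripts, 6))   # 400.0
```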

To fix this problem you will need to:

1) Only include the files that the page really needs. For example, if the page doesn't use a calendar widget, don't include the calendar script on that page.

2) Bundle your JavaScript and stylesheet files. Ideally, merge all scripts into a single file; if that file becomes too large, consider lazy loading parts of it, which depends on the JavaScript framework you use.

3) If a page shows a lot of images, consider lazy loading them: the page reserves empty space to keep the layout stable and only downloads each image when the user scrolls near it. There are many image lazy-load libraries available; for example, this one works with vanilla JavaScript.
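Bundling can be as simple as concatenating files in order. The Python sketch below does just that for classic (non-module) scripts; `bundle_scripts` and the file names are hypothetical, and real build tools such as webpack or esbuild also resolve `import` statements and minify the output.

```python
import tempfile
from pathlib import Path


def bundle_scripts(paths, output_path):
    """Concatenate JavaScript files, in the given order, into one bundle file."""
    parts = []
    for path in map(Path, paths):
        source = path.read_text(encoding="utf-8")
        # The comment marks where each file starts; the newline plus ";" stops a file
        # that ends without a semicolon (or with a // comment) from merging into the next.
        parts.append(f"/* {path.name} */\n{source}\n;")
    Path(output_path).write_text("\n".join(parts) + "\n", encoding="utf-8")
    return Path(output_path)


# Usage with two throwaway files (hypothetical names).
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "menu.js").write_text("var menu = 1", encoding="utf-8")
    Path(tmp, "slider.js").write_text("var slider = 2 // no trailing semicolon", encoding="utf-8")
    out = bundle_scripts([Path(tmp, "menu.js"), Path(tmp, "slider.js")], Path(tmp, "bundle.js"))
    bundle = out.read_text(encoding="utf-8")

print(bundle)
```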

There are various techniques for lazy loading, and some are supported natively by the major browsers, such as the `loading="lazy"` attribute on `<img>` elements.
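Whichever approach you use, the core decision is the same: start loading an image once its position comes within some margin of the visible area, so it is downloading just before the user reaches it. Here is a minimal Python model of that check; `images_to_load` is hypothetical, and the 200px margin is an arbitrary assumption.

```python
def images_to_load(image_tops_px, scroll_y_px, viewport_height_px, margin_px=200):
    """Indexes of images whose top edge is within the viewport plus a look-ahead margin."""
    load_until = scroll_y_px + viewport_height_px + margin_px
    return [index for index, top in enumerate(image_tops_px) if top <= load_until]


tops = [100, 900, 1600, 3000]  # vertical positions of four images on the page
print(images_to_load(tops, scroll_y_px=0, viewport_height_px=800))    # [0, 1]
print(images_to_load(tops, scroll_y_px=700, viewport_height_px=800))  # [0, 1, 2]
```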

5. Eliminate render-blocking resources

The term "above the fold" refers to the content users can see first without scrolling down. Users should be able to see it even before the web page has finished loading.

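The problem is that requests for stylesheets and JavaScript files can delay this content. As mentioned in the previous section, browsers limit the number of parallel connections, so these files compete for download slots. On top of that, the browser won't render the page until the stylesheets in `<head>` have loaded, and a plain `<script>` tag pauses HTML parsing until the script has been downloaded and executed.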

So we should put `<style>` and `<link>` tags inside the `<head>` tag and put all JavaScript files just before the closing `</body>` tag.

With this approach, the browser can render the page content before all the script files have finished downloading.

```html
<!DOCTYPE html>
<html>
<head>
  <style>
    /* critical styles go here */
  </style>
  <link href="xxx.css" rel="stylesheet" type="text/css" />
  <link href="yyy.css" rel="stylesheet" type="text/css" />
  <link href="zzz.css" rel="stylesheet" type="text/css" />
</head>
<body>
  <!-- HTML content here -->

  <!-- Scripts last, so they don't hold up the content above -->
  <script src="xxx.js"></script>
  <script src="yyy.js"></script>
  <script src="zzz.js"></script>
</body>
</html>
```
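To audit an existing page for this, you could scan `<head>` for scripts that would pause parsing. This Python sketch uses the standard library's `html.parser`; `BlockingScriptFinder` and the sample file names are hypothetical. It treats scripts with `async` or `defer`, and `type="module"` scripts (which are deferred by default), as non-blocking, and it deliberately doesn't flag stylesheets, since the advice above keeps those in `<head>`.

```python
from html.parser import HTMLParser


class BlockingScriptFinder(HTMLParser):
    """Collects external <script> tags in <head> that would block HTML parsing."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)  # boolean attributes like defer map to None
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head and "src" in attributes:
            deferred = "async" in attributes or "defer" in attributes
            is_module = attributes.get("type") == "module"
            if not deferred and not is_module:
                self.blocking.append(attributes["src"])

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


page = """<!DOCTYPE html>
<html>
<head>
  <link href="xxx.css" rel="stylesheet">
  <script src="analytics.js"></script>
  <script src="app.js" defer></script>
  <script type="module" src="widgets.js"></script>
</head>
<body>
  <script src="zzz.js"></script>
</body>
</html>"""

finder = BlockingScriptFinder()
finder.feed(page)
print(finder.blocking)  # ['analytics.js']
```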

6. Reduce Initial Server Response Time

Initial server response time is the time between the browser sending a request to the server and the server starting to return its response.

This time depends on how quickly the web server processes the request and renders the response.

To reduce it, there are different approaches for the frontend and the backend.

1) On the frontend, we might build a single-page application (SPA): users download the application once, and it then renders and runs in the user's browser without reloading whole pages, so later requests can be much smaller. If we build the website with server-side rendering instead, we should use page caching. A page cache stores the rendered result: the first request to a given URI is processed and rendered as usual, with some delay, but later requests for the same URI are answered immediately from the cache instead of processing and rendering the page every time.

2) To reduce the response time of an API, consider object or database caching. Work out which parts of your application can be cached. For example, categories and types of data rarely change, so you can cache them to reduce response time.

Conclusion

There are many techniques for reducing page load time, and when you test with page speed tools, they usually give you hints on how to resolve the issues they find.

The fundamentals of speed optimization are simply using appropriate resources and removing unnecessary ones.

Understanding these practices and applying them from the beginning saves a lot of time compared with optimizing later.
