In the fast-paced world of modern software development, Continuous Integration (CI) and Continuous Deployment (CD) have become the backbone of agile workflows. These automated processes ensure faster delivery of high-quality software, enabling development teams to catch issues early, streamline testing, and automate deployment to various environments.

However, even with CI/CD pipelines in place, you may notice that some steps take longer than expected, especially when setting up dependencies. Waiting for these repeated steps, such as installing dependencies, can significantly slow down your development and deployment workflows. At OOZOU, we've adopted a caching strategy using GitHub Actions to dramatically reduce pipeline execution time, providing a more efficient CI/CD process.

The Concern: What Slows Down CI/CD Pipelines?

Even with automation in place, not everything is lightning fast. One of the most common time sinks in CI/CD pipelines is installing dependencies. Because every job starts on a fresh runner, the total time spent installing dependencies grows linearly with the number of jobs or matrix combinations you run. This results in longer build times, delayed testing, and slower deployments.

Imagine you have a matrix build strategy or multiple jobs running in parallel. In each job, dependencies need to be installed before tests or other steps can be executed. The repetitive nature of this task can become a major bottleneck, ultimately increasing the overall duration of your CI/CD pipeline.
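To make the bottleneck concrete, here is a minimal sketch of a matrix build, assuming a Yarn project tested on two hypothetical Node.js version ranges. Each matrix entry runs as a separate job on a fresh runner, so each job repeats the full yarn install:

```yaml
name: CI matrix without cache

on: workflow_dispatch

jobs:
  ci:
    name: Running CI on Node ${{ matrix.node-version }}
    runs-on: ubuntu-latest
    strategy:
      matrix:
        # Hypothetical version ranges; each entry becomes its own job
        node-version: [16.x, 18.x]
    steps:
      - name: Checkout code
        uses: actions/checkout@v3.0.2

      - uses: actions/setup-node@v3.1.1
        with:
          node-version: ${{ matrix.node-version }}

      # Runs once per matrix job, downloading every package from scratch
      - run: yarn install

      - run: yarn test
```

With N entries in the matrix, the install cost is paid N times on every run.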

So, how can we address this issue?

The Solution: Speeding Up CI/CD with Cache in GitHub Actions

At OOZOU, we implemented a caching strategy to solve this problem. By leveraging GitHub Actions, we can cache dependencies and reuse them across different pipeline runs, reducing the time spent on installing dependencies in every job. This allows for faster development and deployment cycles, ultimately improving the developer experience.

GitHub Actions provides access to a marketplace of reusable actions that can improve workflow efficiency. For instance, the actions/setup-node action sets up a Node.js environment in your pipeline. With its caching option enabled, this action can significantly reduce workflow execution time by avoiding redundant package downloads.

Our Initial CI Workflow Without Caching

Here's what a typical CI workflow might look like, without any caching mechanism:

```yaml
name: CI without cache

on: workflow_dispatch

jobs:
  ci:
    name: Running CI
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@v3.0.2

      - uses: actions/setup-node@v3.1.1
        with:
          node-version: v16.14.2

      - run: yarn install

      # Run test/lint etc.
      - run: yarn test
```

In this basic workflow, the code is checked out, the Node.js environment is set up, dependencies are installed with yarn install, and finally the tests are run. This works, but it is inefficient: every run downloads and installs the same dependencies from scratch, wasting time on repeated work.

Introducing Caching to Speed Up the CI Workflow

To make the process more efficient, we can cache the dependencies. By simply adding two additional lines, we can drastically improve the speed of the CI workflow.

Here’s the updated version with caching enabled:

```yaml
name: CI cache

on: workflow_dispatch

jobs:
  ci:
    name: Running CI
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@v3.0.2

      - uses: actions/setup-node@v3.1.1
        with:
          node-version: v16.14.2
          cache: 'yarn'
          # Useful for caching dependencies in monorepos
          cache-dependency-path: yarn.lock

      - run: yarn install

      - run: yarn test
```

NOTE: actions/setup-node now has native support for caching.

Key Additions:

- cache: 'yarn': Enables caching of Yarn's global package cache (the downloaded packages, not node_modules).

- cache-dependency-path: yarn.lock: Uses the hash of the yarn.lock file as part of the cache key, so a new cache entry is created whenever the dependencies change.

By using this cache setup, GitHub Actions automatically stores and retrieves the cached dependencies, reducing the time spent on running yarn install in subsequent builds.
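In a monorepo where each package keeps its own lockfile, cache-dependency-path can also take a multi-line list (or a glob such as '**/yarn.lock'), and the hash of all listed files becomes part of the cache key. A minimal sketch of the setup-node step, assuming two hypothetical lockfiles under packages/web and packages/api and a recent v3 release of the action:

```yaml
- uses: actions/setup-node@v3
  with:
    node-version: v16.14.2
    cache: 'yarn'
    # Hypothetical lockfile paths; a change to either one produces a new cache key
    cache-dependency-path: |
      packages/web/yarn.lock
      packages/api/yarn.lock
```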

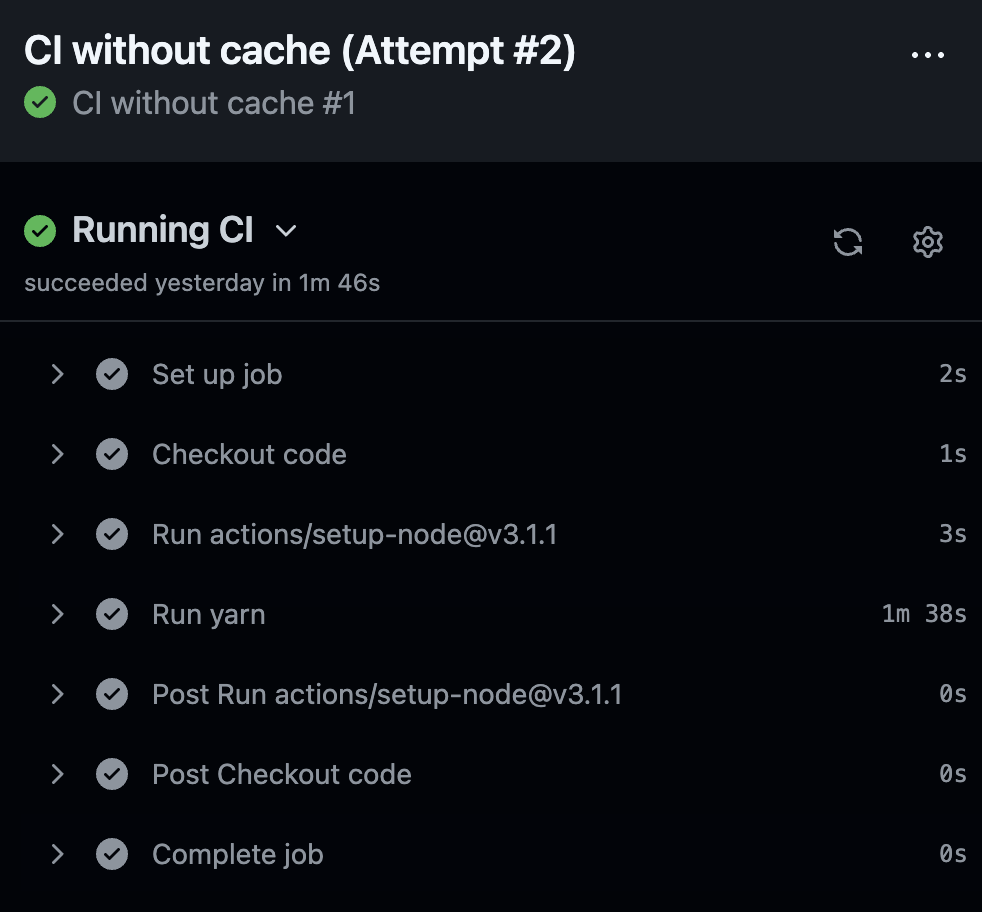

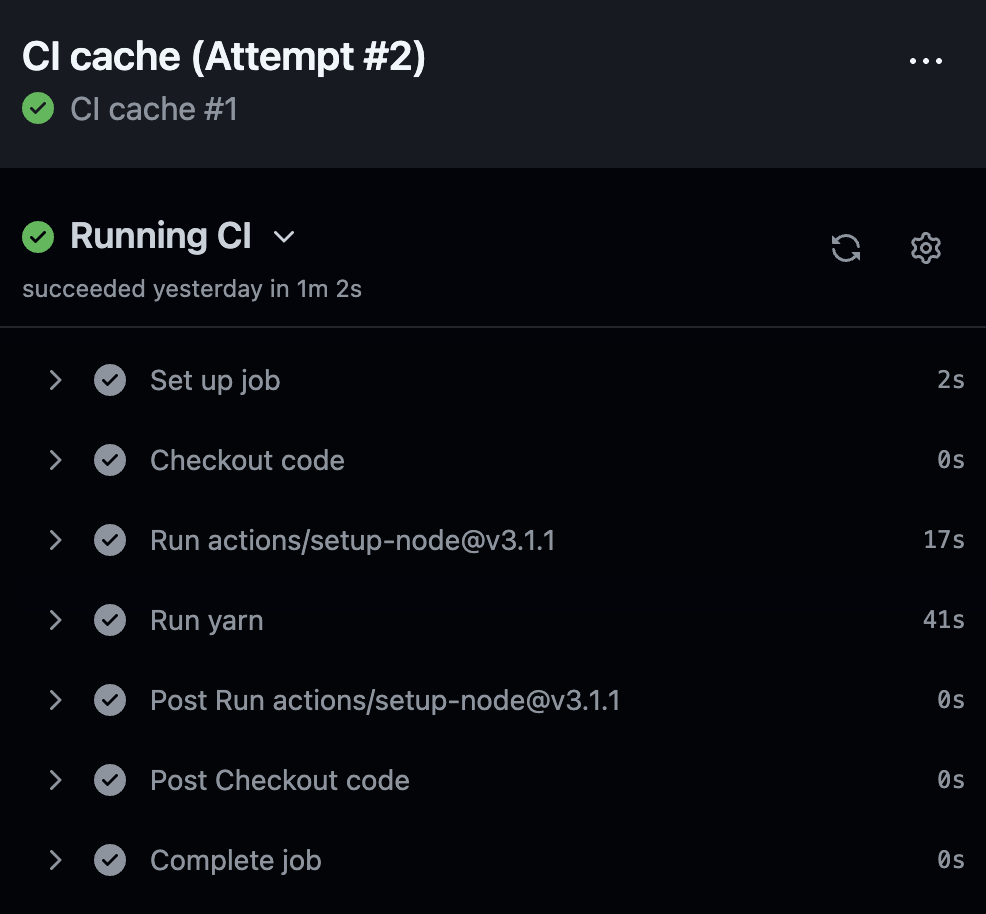

The Result: Faster CI

With this simple addition, we saw immediate performance improvements in our CI pipeline. But what if we could push the boundaries even further?

Going Beyond: Advanced Caching for Node Modules

While the caching mechanism above brings notable improvements, there is still room to optimize further, particularly when the Node.js version remains consistent across pipeline runs. In this case, we don’t want to recompile the dependencies every time.

Here’s how we optimized the pipeline even further by introducing a cache for the node_modules directory, tied to the Node.js version:

```yaml
name: CI cache advance

on: workflow_dispatch

jobs:
  ci:
    name: Running CI
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@v3.0.2

      - uses: actions/setup-node@v3.1.1
        with:
          node-version: v16.14.2

      - name: Get node version
        id: node
        # ::set-output is deprecated; step outputs are written to $GITHUB_OUTPUT
        run: |
          echo "version=$(node -v)" >> "$GITHUB_OUTPUT"

      - name: Get node_modules cache
        uses: actions/cache@v3.0.2
        id: node_modules
        with:
          path: |
            **/node_modules
          # Adding node version as cache key
          key: ${{ runner.os }}-node_modules-${{ hashFiles('**/yarn.lock') }}-${{ steps.node.outputs.version }}

      - run: yarn install

      - run: yarn test
```

What’s Different Here?

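- Get Node Version: We retrieve the current Node.js version and store it as a step output (steps.node.outputs.version).

- Cache node_modules Directly: actions/cache stores every node_modules directory (**/node_modules), so a cache hit restores the installed dependencies rather than only the downloaded packages.

- Cache Key Based on Node.js Version: The cache key combines the OS, the yarn.lock hash, and the Node.js version, so a new cache entry is built only when the lockfile or the Node.js version changes. This avoids recompiling dependencies on every run.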
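Because the key includes the exact Node.js version, the same job definition also works in a matrix build: each version restores and saves its own node_modules entry, so dependencies compiled for one version are not reused on another. A sketch, assuming two hypothetical version ranges (16.x and 18.x); it is not one of the measured workflows:

```yaml
name: CI cache advance (matrix)

on: workflow_dispatch

jobs:
  ci:
    name: Running CI on Node ${{ matrix.node-version }}
    runs-on: ubuntu-latest
    strategy:
      matrix:
        # Hypothetical version ranges; each one gets its own node_modules cache entry
        node-version: [16.x, 18.x]
    steps:
      - name: Checkout code
        uses: actions/checkout@v3.0.2

      - uses: actions/setup-node@v3.1.1
        with:
          node-version: ${{ matrix.node-version }}

      - name: Get node version
        id: node
        # Records the exact version reported by node -v, not the range
        run: echo "version=$(node -v)" >> "$GITHUB_OUTPUT"

      - name: Get node_modules cache
        uses: actions/cache@v3.0.2
        id: node_modules
        with:
          path: |
            **/node_modules
          key: ${{ runner.os }}-node_modules-${{ hashFiles('**/yarn.lock') }}-${{ steps.node.outputs.version }}

      - run: yarn install

      - run: yarn test
```

The key uses the output of node -v rather than matrix.node-version because a range such as 16.x can resolve to a newer patch release over time; keying on the exact version is conservative and simply starts a fresh cache entry when that happens.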

The Impact: CI on Steroids

By refining the caching strategy even further, the workflow time was significantly reduced. Let’s summarize the improvements:

- Normal CI: 1m 46s (baseline)

- Cache CI: 1m 2s (41.51% reduction)

- Cache CI (Advanced): 23s (78.3% reduction)

These optimizations cut the time spent in CI by roughly 78%, leaving developers more time for coding and improving productivity.

Why Does Yarn Install Still Take Time?

You may notice that even with caching enabled, the yarn install step still takes time. The setup-node cache restores only Yarn's package cache, so yarn install still has to link packages into node_modules and rebuild native add-ons, which are compiled for a specific Node.js version. By caching node_modules itself, keyed on the Node.js version, we further reduced this overhead in subsequent builds.
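If a restored node_modules can be trusted to be complete, yarn install could be skipped entirely on an exact cache hit by checking the cache-hit output of actions/cache. This is a possible variation, not what the measured workflow does; the sketch below replaces the last steps of the advanced workflow and assumes its Get node version step (id: node) runs earlier in the same job:

```yaml
- name: Get node_modules cache
  uses: actions/cache@v3.0.2
  id: node_modules
  with:
    path: |
      **/node_modules
    key: ${{ runner.os }}-node_modules-${{ hashFiles('**/yarn.lock') }}-${{ steps.node.outputs.version }}

# cache-hit is 'true' only when the exact key matched, so a fresh
# install still runs whenever yarn.lock or the Node.js version changes
- run: yarn install
  if: steps.node_modules.outputs.cache-hit != 'true'

- run: yarn test
```

Keep the install step unconditional if a postinstall script writes files outside node_modules, since those files would not be restored from the cache.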

Conclusion: Speed Up Your CI/CD with Caching

Caching is one of the simplest yet most effective strategies for improving CI/CD performance. By caching Yarn's package cache and, in the advanced setup, the node_modules directories themselves, we significantly sped up the CI process at OOZOU. The shorter installation time not only improves the developer experience but also makes continuous delivery faster and more efficient.

While we have shown how to optimize CI workflows using caching, there's still more to explore in CD optimization, which we will cover in future articles. Stay tuned for more insights on how we enhance our deployment processes.

Here's the sample repo

FAQs

1. What is CI/CD?

CI/CD stands for Continuous Integration (CI) and Continuous Deployment (CD). CI is the process of automating the integration of code changes from multiple contributors into a single project. CD automates the delivery of integrated code to production.

2. Why is caching important in CI/CD?

Caching in CI/CD reduces redundant tasks like installing dependencies or compiling assets, which can significantly speed up the workflow. By storing and reusing results, pipelines can execute faster, improving overall efficiency.

3. How does GitHub Actions improve CI/CD workflows?

GitHub Actions provides automation tools for setting up, testing, and deploying projects. It offers actions like actions/cache to optimize pipelines by caching dependencies, which reduces time spent on repeated tasks.

4. Can caching be applied to other package managers apart from Yarn?

Yes, GitHub Actions supports caching for various package managers such as npm, pip, Maven, and more. The caching strategy can be adapted to the requirements of each package manager.
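As an illustration, the setup actions for other ecosystems expose the same kind of cache input. A minimal sketch, assuming a hypothetical repository with a Node.js project that has a package-lock.json and a Python project with a requirements.txt that lists pytest:

```yaml
name: CI cache (npm and pip)

on: workflow_dispatch

jobs:
  node:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - uses: actions/setup-node@v3
        with:
          node-version: 16
          # Caches npm's download cache, keyed on package-lock.json
          cache: 'npm'
      - run: npm ci
      - run: npm test

  python:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - uses: actions/setup-python@v4
        with:
          python-version: '3.11'
          # Caches pip's download cache, keyed on the requirements file
          cache: 'pip'
      - run: pip install -r requirements.txt
      - run: python -m pytest
```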

5. How does caching help with dependency management in CI?

Caching dependencies ensures that once they are installed and compiled, they are stored and reused in future runs, reducing the need to re-download and re-install them, which saves time.

6. Is it safe to cache node_modules?

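Yes, it is safe to cache node_modules, provided the cache key is tied to the factors that affect the installed files, such as the OS, the Node.js version, and the yarn.lock hash, to avoid mismatches. Note that a cached node_modules is of no use with npm ci, which deletes node_modules before installing.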

7. How can caching impact deployment (CD) performance?

While this article focuses on CI optimization, caching can also be applied in CD processes by storing build artifacts or Docker layers, which can further reduce deployment times.
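For the Docker layers case, one common approach, outside the scope of the measurements above, is Docker's build-push-action with the GitHub Actions cache backend. A sketch, assuming a Dockerfile at the repository root; the image tag is hypothetical and pushing is disabled, so no registry credentials are needed:

```yaml
name: Build image with layer cache

on: workflow_dispatch

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3

      # The GitHub Actions cache backend requires a Buildx builder
      - uses: docker/setup-buildx-action@v3

      - uses: docker/build-push-action@v5
        with:
          context: .
          # Hypothetical image name; the image is built but not pushed
          tags: example/app:latest
          push: false
          # Read layers from, and write all layers to, the Actions cache
          cache-from: type=gha
          cache-to: type=gha,mode=max
```

mode=max also exports the layers of intermediate build stages, which helps multi-stage Dockerfiles at the cost of more cache storage.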
