Your users care about your application's availability, and that is a function of the application infrastructure design and the durability of the platform on which it's hosted. Let's start by defining these two terms.

Durability

Durability is a measure of how resistant a component is to failure. In terms of application infrastructure, this is most often related to the underlying platform, infrastructure, or hardware on which your software is hosted. It is sometimes measured as a percentage - the closer it is to 100%, the more durable the component. Other times it is measured as Mean Time Between Failures (MTBF) in hours. Luckily, in the age of cloud computing, durability is managed almost entirely by your cloud provider. And that's a good thing, because the costs associated with increased durability lie on a logarithmic scale: each additional increment tends to cost far more than the last.

Availability

Availability in this context is a measure of how likely something is to be ready for use. For software, it is usually expressed as the percentage of time an application is up over a given period. For example, an application that is 99.995% available over one year can be down for no more than 0.005% of that time, or roughly a half-hour (about 26 minutes) over 12 months. Service Level Agreements (SLAs) for hosted applications often state contractual uptime in these terms.
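To make the arithmetic concrete, here is a minimal sketch that converts an availability target into a downtime budget. The function name `allowed_downtime_minutes` and the 365-day default are assumptions chosen for illustration, not part of any SLA standard.

```python
def allowed_downtime_minutes(availability_percent: float, period_days: float = 365.0) -> float:
    """Return the downtime budget, in minutes, for an availability target.

    availability_percent is the target uptime (for example 99.995).
    period_days is the measurement window; 365 days is an assumed default.
    """
    if not 0.0 <= availability_percent <= 100.0:
        raise ValueError("availability_percent must be between 0 and 100")
    if period_days <= 0:
        raise ValueError("period_days must be positive")

    period_minutes = period_days * 24 * 60
    unavailable_fraction = (100.0 - availability_percent) / 100.0
    return period_minutes * unavailable_fraction


# Print the yearly downtime budget for a few common targets.
for target in (99.0, 99.9, 99.99, 99.995):
    print(f"{target}% -> {allowed_downtime_minutes(target):.1f} minutes per year")
```

For the 99.995% example, this works out to a little over 26 minutes per year, which is where the "roughly a half-hour" figure comes from.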

Single Points of Failure

When you are building software, especially applications that serve a community, you should plan for the unknowns. You don't need to dedicate significant time to enumerating every possible unknown; rather, accept the inevitability that something, somewhere, will break down. Depending on your audience and the criticality of your service, application, or data, you can think in terms of how much availability your application needs. There is a direct correlation between availability and cost, so consider it carefully.

For instance, does your application:

- serve your business in a single time zone, Monday through Friday from 9:00 am until 5:00 pm?

- provide global customer or partner access 24 by 7?

- need to accommodate seasonal or periodic peak usage?

- have legal or contractual compliance requirements - like disaster recovery or business continuity?

Despite the growing number of services and managed service offerings from cloud providers, you're likely to be using only a few at any given time, and those are likely to fall within the categories of compute, storage, and database. I'm going to be using AWS (Amazon Web Services) in my examples, but there are direct similarities with Google Cloud Platform and Microsoft Azure. Let's have a look at each of these in the context of a cloud deployment.

Compute

The server or instance on which your application runs already has many redundancies built in. Elastic Compute Cloud (EC2) servers have redundant power, network, and CPU, for example. Instances represent the raw server components such as CPU and memory, and there is a vast list of AMI (Amazon Machine Image) templates from which to choose, ranging from pre-configured operating systems to fully installed applications.

While these instance configurations help to offset the chance of failure, each is still only one instance and thus remains a single point of failure. Adding another layer of redundancy here can help reduce that risk. For example, deploying an Application Load Balancer (ALB) in front of two or more instances running your application mitigates a single point of failure at the server level. The ALB can monitor the health of all your instances and route traffic around a problematic instance. The ALB is also an integral component of auto-scaling, which can follow custom rules to scale out (add instances) so your application can accommodate both predicted and unexpected peaks in traffic, and subsequently scale in (remove instances) when loads return to normal. Load balancing, in general, can be configured for a single Availability Zone (AZ), which consists of one or more physical data centers with redundant power, network, and environmental management. For even more redundancy, you can configure your application to span multiple AZs within a geographic region for additional durability. Keep in mind that cost scales along with a wider footprint.
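As a rough illustration of why a second instance behind a load balancer helps, the sketch below estimates the availability of a group that stays up as long as at least one instance is healthy. It assumes instance failures are independent and ignores the load balancer's own availability, both of which are simplifications; the function name is hypothetical.

```python
def combined_availability(instance_availability: float, instance_count: int) -> float:
    """Estimate the availability of redundant instances behind a load balancer.

    The group is treated as available when at least one instance is up.
    Assumes failures are independent, which real deployments only approximate.
    """
    if not 0.0 <= instance_availability <= 1.0:
        raise ValueError("instance_availability must be between 0 and 1")
    if instance_count < 1:
        raise ValueError("instance_count must be at least 1")

    # Probability that every instance is down at the same time.
    all_down = (1.0 - instance_availability) ** instance_count
    return 1.0 - all_down


# Compare one, two, and three instances that are each 99% available.
for count in (1, 2, 3):
    print(count, f"{combined_availability(0.99, count):.6f}")
```

Under these assumptions, going from one 99% instance to two raises the estimate to about 99.99%. Correlated failures, such as an outage affecting a whole AZ, are what spreading instances across multiple AZs is meant to address.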

The net-net:

- The number and type of instances can be configured easily and can change over time.

- Adding load balancing mitigates the risk of single points of failure.

- Auto-scaling helps you manage your costs by automating the addition/subtraction of instances to accommodate your traffic and load.
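The scale-out and scale-in behavior described above can be sketched as a simple rule. This is not the algorithm AWS uses; it is a hypothetical proportional rule with assumed names, an assumed 60% CPU target, and assumed minimum and maximum counts.

```python
import math


def desired_instance_count(
    current_count: int,
    average_cpu_percent: float,
    target_cpu_percent: float = 60.0,  # assumed target utilization
    min_count: int = 2,                # keep at least two to avoid a single point of failure
    max_count: int = 10,               # assumed cost ceiling
) -> int:
    """Return how many instances a simple proportional scaling rule would ask for."""
    if current_count < 1:
        raise ValueError("current_count must be at least 1")
    if target_cpu_percent <= 0:
        raise ValueError("target_cpu_percent must be positive")
    if min_count > max_count:
        raise ValueError("min_count must not exceed max_count")

    # Scale the fleet in proportion to how far the load is from the target.
    proposed = math.ceil(current_count * average_cpu_percent / target_cpu_percent)

    # Clamp to the configured bounds so costs and redundancy stay predictable.
    return max(min_count, min(max_count, proposed))


print(desired_instance_count(4, 90.0))  # load above target: scale out
print(desired_instance_count(4, 15.0))  # load well below target: scale in to the minimum
```

The minimum bound matters as much as the maximum: it keeps redundancy in place during quiet periods, while the maximum keeps an unexpected spike from running up an unbounded bill.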

Storage

There are several points to consider about storage when designing for availability. While your application is running, it is consuming local storage resources on the instance(s) where it is hosted. This is the most volatile storage and should be considered disposable in the event that you lose an instance. Losing non-critical data and metadata, such as temporary storage, is usually survivable. However, cloud applications must ensure that critical data is kept on less volatile storage. On AWS, for example, a self-hosted database would benefit from being stored on EBS (Elastic Block Store), somewhat analogous to a hard drive or SSD that is mounted on the instance. EBS volumes are replicated within an AZ by default and can be easily and automatically backed up via snapshots, which can then be restored and used when spinning up new instances.

Next, there's the Elastic File System (EFS) service, most closely resembling a NAS (Network Attached Storage), for use when multiple instances or services need to access the same file data (logs, directories, repositories, documents, etc.). It automatically scales out and in to accommodate growing and shrinking data needs without any manual intervention. It can be configured to span multiple AZs.

Finally, there's the Simple Storage Service (S3), which can store just about anything. It is an object-level storage service with extremely high durability and availability. S3 is ideal for any objects that require long-term storage and has pricing tiers that accommodate the type of access needed. S3 is often used to store application assets, binary data, database backups, storage snapshots, and other static content. It scales automatically and is charged based on the volume of data stored each month. It has many other benefits including global caching, long-term archiving, individual object permissions and policies, and even static web site hosting.

The net-net:

- Choose your storage needs based on the type of data you need to store

- Pick a service level based on how you will access your data (immediate, infrequent, archival, etc.)

- Back up your data on a frequency that maximizes your ability to recover within an acceptable time frame
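One way to think about picking a service level is to classify data by how recently it was accessed. The sketch below is illustrative only: the 30-day and 180-day thresholds are assumptions, as is the mapping to the S3 storage class names `STANDARD`, `STANDARD_IA`, and `GLACIER`.

```python
from enum import Enum


class AccessPattern(Enum):
    IMMEDIATE = "immediate"
    INFREQUENT = "infrequent"
    ARCHIVAL = "archival"


# Assumed mapping from access pattern to an S3 storage class name.
STORAGE_CLASS_FOR_PATTERN = {
    AccessPattern.IMMEDIATE: "STANDARD",
    AccessPattern.INFREQUENT: "STANDARD_IA",
    AccessPattern.ARCHIVAL: "GLACIER",
}


def classify_access(
    days_since_last_access: int,
    infrequent_after_days: int = 30,   # assumed threshold
    archive_after_days: int = 180,     # assumed threshold
) -> AccessPattern:
    """Classify data by how long it has gone without being read."""
    if days_since_last_access < 0:
        raise ValueError("days_since_last_access must not be negative")
    if infrequent_after_days > archive_after_days:
        raise ValueError("infrequent_after_days must not exceed archive_after_days")

    if days_since_last_access >= archive_after_days:
        return AccessPattern.ARCHIVAL
    if days_since_last_access >= infrequent_after_days:
        return AccessPattern.INFREQUENT
    return AccessPattern.IMMEDIATE


pattern = classify_access(45)
print(pattern.value, STORAGE_CLASS_FOR_PATTERN[pattern])
```

In practice the right thresholds depend on retrieval costs and how quickly you need the data back, so treat the numbers here as placeholders.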

Database

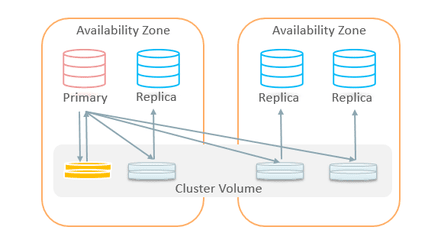

There are really two main strategies for databases in the cloud - you can either host them yourself (on one or more of your instances, for example), or leverage a fully-managed database service. You may consider using a managed service such as one of the many flavors of RDS (Relational Database Service). There are no servers for you to manage and patch, and RDS can be configured for multi-AZ deployments to make it highly available. With backups to S3, for example, your data is ready to be restored to any location in the event of a catastrophic loss in any given region. If you decide to manage your own database (e.g., hosted locally on an EC2 instance), you would need to manage your own availability challenges, such as replication, master/slave setup, storage, backups and restores, as well as software patching and security management. With RDS, this is handled almost entirely within a fully-managed service. The added cost of RDS should be weighed against the amount of manual effort needed to keep your own database up and running.

The net-net:

- Using a managed service gets you high availability with far less effort

- Trade-offs are mostly about cost: manual effort vs. convenience

- If your data matters to you, don't take any chances - backups, security, scaling
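Because an application typically needs its compute, storage, and database tiers all working at once, the weakest tier limits the whole. The sketch below multiplies per-tier availabilities to estimate the end-to-end figure. It assumes the tiers fail independently and that the application is down whenever any one of them is down; the function name and the example values are hypothetical.

```python
import math
from typing import Iterable


def serial_availability(component_availabilities: Iterable[float]) -> float:
    """Estimate end-to-end availability when every component must be up.

    Assumes independent failures; each value is a fraction between 0 and 1.
    """
    values = list(component_availabilities)
    if not values:
        raise ValueError("at least one component is required")
    for value in values:
        if not 0.0 <= value <= 1.0:
            raise ValueError("each availability must be between 0 and 1")

    # All components must be up at the same time, so the probabilities multiply.
    return math.prod(values)


# Hypothetical compute, storage, and database tiers at 99.9% each.
print(f"{serial_availability([0.999, 0.999, 0.999]):.6f}")
```

Three tiers at 99.9% each come out to roughly 99.7% overall under these assumptions, which is why a single unprotected tier can undo the gains made elsewhere.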

Takeaway

There is little excuse for deploying a production application environment that is nothing more than a "gold copy" of the local development environment from which it came. Cloud platforms offer a basic set of tools and services that make it easy to build a highly-available infrastructure for your applications. There are plenty of real-world examples, templates, and references to make this much less painful to implement.

While highly-available application deployments may cost more, they significantly reduce the risk of data loss and application service disruption. And given the economies of scale of cloud provider services today, these costs are often nominal and easily folded into project budgets. It's the cost of not making your apps highly-available that should be of highest concern.
